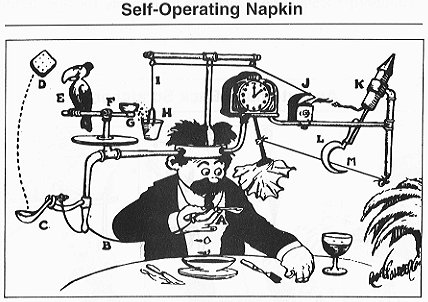

Garbage In, Garbage Out

Muffley: But this is fantastic, Strangelove. How can it be triggered automatically?

Strangelove: Well, it's remarkably simple to do that. When you merely wish to bury bombs, there is no limit to the size. After that they are connected to a gigantic complex of computers. Now then, a specific and clearly defined set of circumstances, under which the bombs are to be exploded, is programmed into a tape memory bank.

Raise your hand if you remember phone books. How did you find a person in a phone book? You could just start on page one, going through the book one listing at a time until you found who you were looking for. Phone books are conveniently alphabetized, however, so a better way would be to pick a point about halfway through, open it up, compare what you see to the name you’re looking for, and then pick a point halfway through whichever half contains the name and repeat the process. You’d keep doing this until you found the right page, at which point you could start a similar search of the listings therein.

This is called a binary search, and the number of looks it takes to locate the correct page is, in the worst case, about log₂ n, where n is the number of pages. I’m sorry, I didn’t warn you there’d be math, but no worries, I’ll do it for you. If you have a phone book with a thousand pages, and it takes you one second to make a determination each time you pick your new halfway point, you can narrow down to the correct page in about ten seconds.1
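For the curious, here is a minimal Python sketch of the halving procedure described above. The `find_page` name and the thousand numbered pages are stand-ins invented for illustration; a real phone book would compare names rather than integers.

```python
def find_page(pages, target):
    """Binary search over a sorted list.

    Returns (index, looks), where looks is how many halfway points
    were inspected. index is None if the target is not present.
    """
    lo, hi = 0, len(pages) - 1
    looks = 0
    while lo <= hi:
        mid = (lo + hi) // 2          # pick a point about halfway through
        looks += 1
        if pages[mid] == target:
            return mid, looks
        if pages[mid] < target:
            lo = mid + 1              # target is in the later half
        else:
            hi = mid - 1              # target is in the earlier half
    return None, looks


# Hypothetical thousand-page phone book, pages numbered 1..1000.
pages = list(range(1, 1001))
worst = max(find_page(pages, p)[1] for p in pages)
```

Run over every page of that thousand-page book, `worst` comes out at ten looks, which matches the log₂ estimate.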

That’s not bad! I’m not sure people appreciate what a stroke of genius alphabetization is. If there was no order to the pages and you picked them out one at a time or randomly, it would take, on average, five hundred seconds (a bit over eight minutes) to find a page. Or almost seventeen minutes in the worst case! Merely arranging the pages according to an Egyptian technology from almost four thousand years ago produces a hundred-fold improvement in search time. That’s amazing.
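The unsorted case can be checked the same way. This is a rough sketch, assuming a shuffled list of page numbers stands in for a phone book with no order to it; `linear_looks` is a name made up for the example.

```python
import random


def linear_looks(pages, target):
    """Check pages one at a time in the order given; return how many were checked."""
    for looks, page in enumerate(pages, start=1):
        if page == target:
            return looks
    return len(pages)


pages = list(range(1, 1001))
random.shuffle(pages)                 # no order to the pages

# Look up every page once and record how long each search took.
counts = [linear_looks(pages, target) for target in range(1, 1001)]
average_looks = sum(counts) / len(counts)
worst_looks = max(counts)
```

However the pages are shuffled, each one sits at a different position, so the average works out to 500.5 looks and the worst case to 1000.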

Binary search is an algorithm—a procedure for solving a problem. The word “algorithm” has taken a few body blows in the last decade or so, as it has found itself presiding over a host of questionable business practices by companies hovering on the brink of monopoly power, like a comedian invited to perform at a dinner party who discovers that everyone in attendance is wearing three-piece suits with mysterious bulges and obsequiously stuffing hundred-dollar bills into the pockets of the wait staff. We all talk about the Google algorithm or the YouTube algorithm or the Amazon algorithm; it’s not that these things aren’t algorithms—they are—but they are much more expansive than what was traditionally thought of as an algorithm until we needed a word to describe the processes by which large tech companies direct, filter, and shape our communications. So it goes, but maybe we need a new word to refer to those beautiful little puzzle solvers like binary search or bubble sort or linear programming. Algorithms, writ small, are hammers and wrenches. The Google algorithm is a sprawling behemoth, encompassing not just what its countless computers are doing, but also the company policies that leave Chinese users with a smaller index than the rest of us.

Dave: How would you account for this discrepancy between you and the twin 9000?

HAL: Well, I don’t think there is any question about it. It can only be attributable to human error. This sort of thing has cropped up before, and it has always been due to human error.

Frank: Listen HAL. There has never been any instance at all of a computer error occurring in the 9000 series, has there?

HAL: None whatsoever, Frank. The 9000 series has a perfect operational record.

Frank: Well of course I know all the wonderful achievements of the 9000 series, but, uh, are you certain there has never been any case of even the most insignificant computer error?

HAL: None whatsoever, Frank. Quite honestly, I wouldn’t worry myself about that.

I don’t know whether the general public has yet come to grips with the fact that algorithms are not merely the gnomic operations of computers acting on their strange cold alien intelligence according to prerogatives barely comprehensible even to the high clerics of code that program them in rooms dimly lit by the viridescent glow of gibberish text cascading down black glass voids. There have been stirrings around facial recognition technology, especially in light of revelations in the science press that Black faces are less discernible to computers than White ones. Why is this so? The answer is quite simple: the bias of programmers and—probably more important—their masters.

Computers, it’s worth remembering, are machines. Tech companies have struggled mightily to convince us that they are “information appliances” or even some species of subhuman servants. But just because you package a computer in a slick black plastic box and give it voice recognition capabilities, well, sorry, but it’s still just a machine—a universal symbol manipulation machine, to borrow Alan Turing’s phrase—but a machine nonetheless. When someone drives their car into a crowd of people, we don’t blame the car. Likewise, when a computer can’t tell the difference between two Black people, we shouldn’t blame the computer.

Spotting bias in algorithms is kind of a fun game, because it tells you everything about the entity that makes—or is—the algorithm. It tells you what is really important to them.

The other night my wife and I were watching an Allman Brothers concert2 on YouTube. If you’re like me you’ve been in your house digesting terabytes of video entertainment for the past year, and you may have noticed that YouTube has gotten fairly aggressive with its advertising lately. The thing is, they drop ads into long videos with absolutely no respect for where they happen to fall. It’s not quite random; the frequency increases as the video goes on—this is YouTube’s version of giving you the first hit for free. Anyway, nothing kills the mood as quickly as an ad for prolapse relief in the middle of a Dickey Betts guitar solo.

This got me to thinking—and I realize there’s only so much I can criticize YouTube when they’re giving me all this stuff for free—one thing that’s undeniable is that YouTube doesn’t give one tiny shit about art. If artistic integrity was important to them, they could fix this; it wouldn’t be that difficult to identify the beginnings and ends of songs and put the ads there. But YouTube is in the content-delivery business, and content is not about songs or speeches or even Minecraft speedruns. Content is in fact content-free. It’s a uniform gray gruel in which the only meaningful qualities are the length and whether there are nipples.

Luke: I can eat fifty eggs.

Dragline: Nobody can eat fifty eggs.

Society Red: You just said he could eat anything.

Dragline: Did you ever eat fifty eggs?

Luke: Nobody ever eat fifty eggs.

Prisoner: Hey, Babalugats. We got a bet here.

Dragline: My boy says he can eat fifty eggs, he can eat fifty eggs.

Loudmouth Steve: Yeah, but in how long?

Luke: A hour.3

Once you can see the biases in corporate algorithms, you can start to appreciate the biases in the algorithms we use to order our society as a whole. For instance, there is the inverted wealth generation algorithm, which can be characterized by the old saw, “the first million is the hardest.” This is true: the richer you get, the more opportunities you have to get richer still. If you’ve got thirty bucks in your pocket you can maybe buy a case of water and sell it outside a ballpark. If you’ve got thirty million bucks in your pocket you can maybe build a brewery on the corner and kick all the water guys out. Life gets cheaper as you get richer too: a millionaire can afford insurance against the sort of unexpected catastrophic events that would bankrupt a hundred- or thousandaire.

True story: Several years ago I was pulled over for running a red light, and I showed up in court with an iPad video illustrating the fact that the yellow light at the intersection was burned out, leaving drivers without warning that the signal was changing. I strode into court like a Greek god, brandishing my iPad in the full confidence of its persuasive power to upend this miscarriage of justice. But I never had a chance to show it to anyone because my options, as underlined by the judge in a stentorian tone that could be described as “shouting,” were limited to either pleading guilty and paying a $250 fine or pleading not guilty and taking my case before a jury. This was a traffic violation, remember—not assault or fraud, not even a school shooting. If I lost the trial I would have to pay the original fine plus court costs, totaling at least a thousand bucks. There was no one in the courtroom who could advise me on whether doing this would be clever or colossally stupid, and obviously I was not going to retain a lawyer over a matter of $250. I know I was right, but the system is not designed to divine right and wrong—only to extract fines. Traffic court, at least as it’s designed in Georgia, is an algorithm for hoovering money out of the population. In this light, I have a lot more respect for the YouTube algorithm, because they’ll drive you nuts and chop off your Talking Heads when it’s most inconvenient, but at least they give you bursts of entertainment. Traffic court gives you the option of not receiving a worse beating.

Anyway, I was “rich” enough to merit ownership of a credit card, onto which I placed the fine, swaggering at my dizzying economic power. What if I was rocking a minimum wage from Taco Bell instead? A full-time minimum wage employee in Georgia nets slightly over fifteen grand a year, assuming she works fifty-two weeks and forty hours a week, which is a massive stretch if you know anything about minimum wage jobs. A $250 fine is 1.6% of that. Thomas Fanning, the CEO of the Southern Company, is paid $1,389,616 a year. The red-light fine is only 0.02% of his salary. Tom could run eighty red lights before he reaches parity with the peon serving him his Crunchwrap Supreme®, and even then he’d still have $1,369,616 left for Nacho Fries BellGrande®.
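The percentages above can be reproduced with a few lines of Python. This is a sketch under one assumption not stated in the text: that the minimum wage in question is the $7.25-an-hour federal rate, which gives the "slightly over fifteen grand" figure.

```python
# Assumption (not stated in the essay): the $7.25/hour federal minimum wage,
# worked 40 hours a week for 52 weeks.
MIN_WAGE_INCOME = 7.25 * 40 * 52      # 15,080 dollars a year
CEO_SALARY = 1_389_616                # salary figure quoted in the essay
FINE = 250


def fine_share(income, fine=FINE):
    """The fine as a percentage of annual income."""
    return 100 * fine / income


worker_pct = fine_share(MIN_WAGE_INCOME)       # roughly 1.66
ceo_pct = fine_share(CEO_SALARY)               # roughly 0.018
parity_fines = CEO_SALARY / MIN_WAGE_INCOME    # fines needed to hurt equally
```

Dividing the rounded percentages (1.6 by 0.02) gives the eighty red lights quoted above; with the unrounded figures, `parity_fines` lands a little over ninety-two. Either way the point stands.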

Alternatively, Tom could have put that $20k into the S&P 500 last year and he would have netted a cool $3,690.

Wealth begets wealth. It’s pretty much built into the system we’ve created; the algorithm of society. But it doesn’t have to be that way. An algorithm is a procedure for solving a problem. We solve a lot of problems as a society, but we ignore a lot of problems as a society as well, and observing which problems do not merit a tweak in the algorithm tells you a lot about the biases of the system. We haven’t solved systemic racism, for instance, because, on the whole, our system is biased to ignore or disbelieve in systemic racism. This does not mean there aren’t lots of individuals who don’t ignore or disbelieve it, but the fact that there are demonstrates why we must rely on common, societal mechanisms for solving systemic problems—because they are systemic and not simply individual. We can send cat pictures instantly to practically anywhere in the world, and that’s OK, I’m not knocking that—it’s a great and abiding joy. But cat picture sending was not caused by individuals changing their behavior; it was caused by massive technical investment by our whole society. Don’t tell me we couldn’t solve systemic racism or economic injustice if our system valued those problems. It’d be a snap.

What I don’t and probably never will understand is why our society has the biases it does. We all have an inherent sense of justice, and I can prove it. Ever play Dungeons and Dragons? On its surface D&D is a sort of shared storytelling game in which players act as characters in a fantasy world conjured up by a sort of arbitrator-player called the dungeon master. The game’s algorithm is heavily weighted toward determining the outcomes of events using dice—so essentially D&D is just a stochastic model of a world both similar and dissimilar to our own. Really it’s a whole collection of models—this is the reason I quit playing it. Once it got to the point where my fellow players and I were spending all of our time digging through rulebooks in search of the table of values that modeled, say, the effects of inclement weather on plate armor or the number of hit points of damage incurred per round by a critical slicing wound versus a blunt force wound, I decided my time was better spent elsewhere. But I still often think about the biases in the game, many of which are explicitly aimed at making it feel fair to the players. There is a range of challenges and rewards available to players, and they are carefully calibrated to allow for play that is neither too difficult nor too easy.

Consider, for example, experience. Experience is a quantitative value assigned to every player character. It can be increased by various amounts by defeating enemies, collecting treasure, and accomplishing quests posed by the dungeon master. The amount of each increase reflects the difficulty of each task undertaken, e.g. killing a dragon is worth more than killing a cockroach (excepting giant, fire-breathing cockroaches of course). On reaching a certain experience threshold, the player’s character “levels up,” acquiring new skills, powers, spells, or whatever, and generally improving their combat worthiness. It’s a pretty simple system, but with the key bias that advancement is not linear. Each level is harder to reach than the last.
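To make "not linear" concrete, here is a toy experience curve in Python. The numbers are invented for illustration and are not taken from any D&D rulebook: the assumption is simply that the gap between levels doubles each time.

```python
def xp_to_reach(level, base=1000):
    """Total experience needed to reach `level` on a hypothetical doubling curve.

    The gap between levels is base, 2*base, 4*base, and so on.
    """
    if level <= 1:
        return 0
    return base * (2 ** (level - 1) - 1)


def level_for(xp, base=1000):
    """The level a character with `xp` experience points has reached."""
    level = 1
    while xp >= xp_to_reach(level + 1, base):
        level += 1
    return level


# Gap between consecutive levels: each one is harder to reach than the last.
gaps = [xp_to_reach(n + 1) - xp_to_reach(n) for n in range(1, 5)]
```

On this made-up curve, `gaps` is 1000, 2000, 4000, 8000: a newcomer levels up after a handful of cockroaches, while a veteran needs a great many dragons.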

If you’ve never played D&D, but you have played a video game made in the last thirty years, you’ve probably run into some variation of this idea. It’s ubiquitous, and with good reason: it’s fair. When you’re getting started you should have at least some opportunities to catch up with others, and when you’re flogging the game with a meat tenderizer it should take some real effort to keep pushing onward. The opposite is frustrating for the former and boring for the latter.

I honestly just don’t get why graduated difficulty isn’t part of our algorithm—it’s part of natural law; it’s why animals don’t just grow until they can blot out the sun. Computers are machines and humans are animals. Why are we exempt from this? Are we, in fact? I’m inclined to think the answer to that is no.

One of my favorite algorithms is something called linear programming. It’s a method for optimizing a linear function subject to a series of linear constraints. Let me give you a simple example.

Let’s say you run a chocolate company and you make three products: the Mini Chocobox, which sells for $3 and uses one unit each of chocolate, plastic, and cardboard; the Regular Chocobox, which sells for $5 and uses two units of chocolate and two units of plastic; and the Coronary Chocobox, which sells for $9 and uses three units of chocolate and one each of plastic and cardboard. Each unit of chocolate, plastic, and cardboard costs $1.28, $0.74, and $0.35, respectively.

Tell me real quick, if you have $400 to spend on products for sale, what combination of Minis, Regulars, and Coronaries will produce the highest profit?

I’m not going to go into how linear programming works, but you can get a library of code in just about any computer language that will perform the algorithm and give you an answer to that question in pretty short order, even with a lot more variables than in my example. Think about that: it’s not that you couldn’t struggle and flail and eventually figure out an answer—maybe even the right one—but this elegant algorithm gives you an efficient way to do it, and it’s available, for free, to anyone who wants to figure out how to use it.
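For an example this small you can also just make the computer do the struggling and flailing. The sketch below is not linear programming; it is plain exhaustive search over whole-box counts, assuming the $400 budget is the only constraint and that partial boxes are not allowed. All names are made up for the example, and money is kept in cents so the arithmetic stays exact.

```python
# All money in whole cents so the arithmetic stays exact.
MATERIAL_COST = {"chocolate": 128, "plastic": 74, "cardboard": 35}
BUDGET = 400 * 100

# product: (sale price, {material: units used}), as described in the essay
PRODUCTS = {
    "mini": (300, {"chocolate": 1, "plastic": 1, "cardboard": 1}),
    "regular": (500, {"chocolate": 2, "plastic": 2}),
    "coronary": (900, {"chocolate": 3, "plastic": 1, "cardboard": 1}),
}

# Cost to make one box, and profit on selling it.
COST = {
    name: sum(MATERIAL_COST[m] * units for m, units in recipe.items())
    for name, (_, recipe) in PRODUCTS.items()
}
PROFIT = {name: PRODUCTS[name][0] - COST[name] for name in PRODUCTS}


def best_mix(budget=BUDGET):
    """Try every whole-number mix that fits the budget; return (profit, mix)."""
    best_profit = 0
    best = {"mini": 0, "regular": 0, "coronary": 0}
    for minis in range(budget // COST["mini"] + 1):
        after_minis = budget - minis * COST["mini"]
        for regulars in range(after_minis // COST["regular"] + 1):
            left = after_minis - regulars * COST["regular"]
            # Every Coronary earns a profit, so spend whatever is left on them.
            coronaries = left // COST["coronary"]
            profit = (minis * PROFIT["mini"]
                      + regulars * PROFIT["regular"]
                      + coronaries * PROFIT["coronary"])
            if profit > best_profit:
                best_profit = profit
                best = {"mini": minis, "regular": regulars, "coronary": coronaries}
    return best_profit, best


profit_cents, mix = best_mix()
```

Under those assumptions the search settles on 81 Coronaries and nothing else, for a profit of $329.67. Add a second constraint, say a limited supply of cardboard, and brute force gets unwieldy quickly, which is where a real linear programming library earns its keep.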

This is the most interesting distinction I can see between algorithms and Algorithms®—classic mathematical algorithms give us pure, unadulterated efficiency, a means of living in the world without taxing it beyond its capacity, while the mechanisms of commerce and society use efficiency as a tool to accomplish other ends, ends which subvert the very gifts we’ve been given by technology, and ends which would be invisible were it not for the marks they leave on the data that passes through them.

log₂ n, if you’ve forgotten your high school math, is the power to which 2 must be raised to produce n. So for instance, log₂ 8 is 3 because 2³ is 8. log₂ 1000 is 9.965784284662087...

You’re welcome.

In case you just fell off a tomato truck, the quotes are from Dr. Strangelove, 2001: A Space Odyssey, and Cool Hand Luke. They’re all great films and you should go watch them immediately.